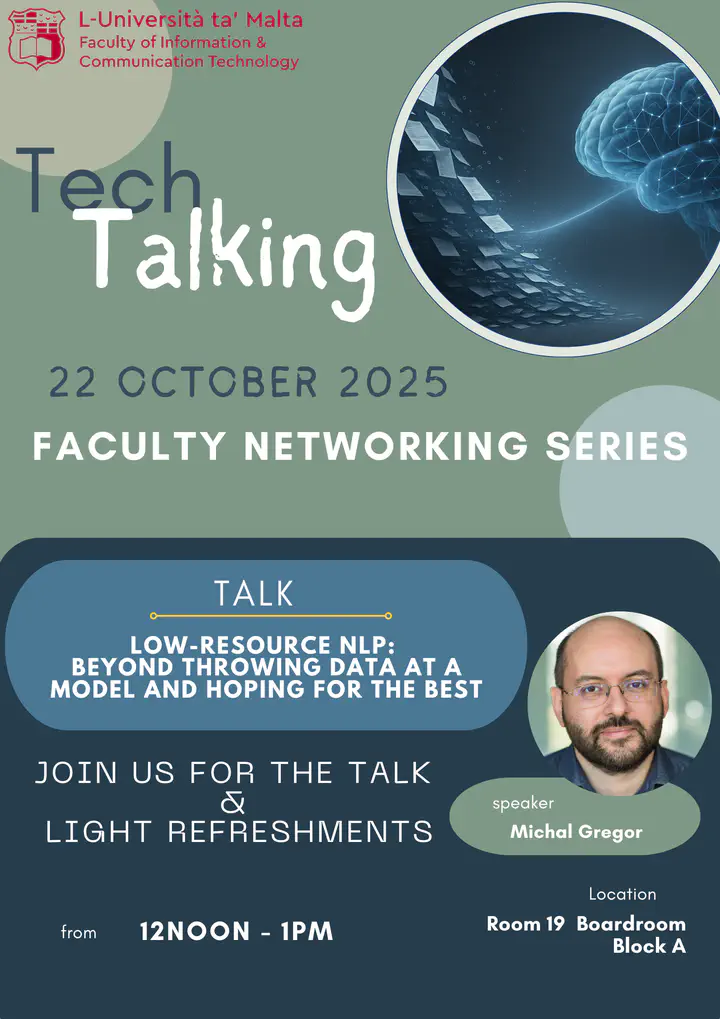

Low-Resource NLP: Beyond Throwing Data at a Model and Hoping for the Best

Abstract

The talk will argue that machine learning, even in the era of deep learning and large language models, is not just about throwing increasingly large amounts of data at models and hoping for the best. It will draw on examples from recent literature to demonstrate how our lack of understanding can severely limit the capabilities of our models. It will then carry these insights over to low-resource NLP and explore several avenues that might make adapting LLMs to low-resource languages more efficient.

Date

Oct 22, 2025

Event

Tech Talking; University of Malta

Location

Msida, Malta